Developing a bird sound classifier via machine learning: current insights

The Lowdown for BirdCLEF+ 2025:

Hear ye, hear ye! Here's all the juicy deets about the birdie brouhaha – the BirdCLEF+ 2025 competition. Don't get your feathers ruffled, I'll break it down for ya.

This shindig, held on the Kaggle platform, is all about using technology to identify different species by their sweet serenades. And it ain't just the birds: amphibians, mammals, and insects from the Middle Magdalena Valley of Colombia are in the mix too!

The big kahuna? Creating methods to distinguish these species' songs and squawks, with a focus on under-the-radar critters. These methods help us better understand their habits and habitats by letting researchers snoop around without even setting foot in the habitat.

What's the Score?

It all comes down to Species Identification, where we're trying to develop ways to identify birds (and such) from their audio data, using machine learning magic. The two big goals:

- Training Reliable Classifiers: The aim is to build smart algorithms that still spit out reliable classifications for these feathery friends when labeled data is limited.

- Advancing Bioacoustics Research: The competition encourages exploration in the field of bioacoustics – especially for often overlooked creatures – by utilizing machine learning chops.

The Smart Tools:

Deep learning

Deep learning is the bee's knees for this task, thanks to its ability to process and scrutinize complex audio files like nobody's business. Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs) are the hip picks here: CNNs latch onto local shapes in a spectrogram, while RNNs follow how a sound unfolds over time. Both can recognize the unique patterns in audio signals that separate one species from the pack.
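
To make "recognizing patterns" a bit more concrete, here's a toy sketch of the sliding-filter operation at the heart of a CNN layer, in plain NumPy. Everything in it is made up for illustration: the 6x6 "spectrogram", the hand-written filter, and the helper name `conv2d_valid`. A real network learns many such filters from data instead of having them typed in by hand.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Slide kernel over image with no padding and return the response map.

    This is cross-correlation, which is what CNN layers compute in practice.
    """
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

# Toy "spectrogram": rows are frequency bins, columns are time frames.
# In this toy grid the row index grows with frequency, so a rising
# chirp shows up as a diagonal streak of energy.
spec = np.zeros((6, 6))
for t in range(6):
    spec[t, t] = 1.0

# Hand-made 3x3 filter that responds to a rising diagonal
kernel = np.eye(3)

# The response is largest wherever the filter lines up with the streak
response = conv2d_valid(spec, kernel)
```

The response map lights up along the chirp and stays at zero elsewhere, which is the sense in which a filter "detects" a pattern.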

Mel-Spectrograms

Mel-spectrograms are the cat's pajamas when it comes to identifying birds by sound. They're a visual representation of sonic goodness: time runs along one axis, frequency along the other, and the frequency axis is warped onto the mel scale, which gives more room to the lower frequencies where human ears are pickiest about pitch. Their usefulness? They handle preprocessing and feature extraction in one go, and the resulting arrays are what a deep learning model (mentioned earlier) gets trained on to sort the sounds into their respective species.
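
Curious what that mel warping actually does to the frequency axis? Here's a rough sketch using only the standard library. It assumes the common HTK-style formula, mel = 2595 * log10(1 + f / 700); librosa's default is a slightly different (Slaney) variant, so treat the numbers as illustrative. The function names are invented for this post.

```python
import math

def hz_to_mel(freq_hz):
    """HTK-style Hz -> mel conversion (an assumption; other variants exist)."""
    return 2595.0 * math.log10(1.0 + freq_hz / 700.0)

def mel_to_hz(mel):
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)

def mel_band_edges(n_mels, fmin_hz, fmax_hz):
    """Band edges in Hz for n_mels bands spaced evenly on the mel scale.

    n_mels overlapping triangular bands need n_mels + 2 edge points.
    """
    lo, hi = hz_to_mel(fmin_hz), hz_to_mel(fmax_hz)
    step = (hi - lo) / (n_mels + 1)
    return [mel_to_hz(lo + i * step) for i in range(n_mels + 2)]

# Eight bands up to 8 kHz, just to keep the printout short
edges = mel_band_edges(n_mels=8, fmin_hz=0.0, fmax_hz=8000.0)
widths = [b - a for a, b in zip(edges, edges[1:])]

for a, b in zip(edges, edges[1:]):
    print(f"{a:8.1f} Hz to {b:8.1f} Hz")
```

Each band comes out wider in Hz than the one before it, so the low end of the spectrum gets finer slices than the high end.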

Making the Magic Happen: Code Example Time!

Feeling stumped about how it all comes together? Fret not! Here's an elementary example using Python and the Librosa library:

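```python
import librosa
import numpy as np

# Load the recording (librosa resamples to 22,050 Hz by default)
audio, sr = librosa.load('example_audio_file.wav')

# Compute a mel-scaled power spectrogram
mel_spectrogram = librosa.feature.melspectrogram(
    y=audio,         # Recent librosa versions require the keyword form
    sr=sr,
    n_mels=128,      # Number of mel bins
    fmax=8000,       # Maximum frequency
    n_fft=2048,      # FFT window size
    hop_length=512   # Hop length
)

# melspectrogram returns power, so convert with power_to_db
# (decibels relative to the loudest bin)
log_mel_spectrogram = librosa.power_to_db(mel_spectrogram, ref=np.max)
```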
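
Wondering how big that spectrogram turns out? Here's a back-of-the-envelope sketch. It assumes librosa's default centered framing (`center=True`), where the number of frames is 1 + n_samples // hop_length, and the default 22,050 Hz sample rate from `librosa.load`. The helper name and the 5-second clip length are just for illustration.

```python
def spectrogram_shape(duration_s, sr=22050, n_mels=128, hop_length=512):
    """Estimate (n_mels, n_frames) for a clip, assuming centered framing."""
    n_samples = int(duration_s * sr)
    n_frames = 1 + n_samples // hop_length
    return n_mels, n_frames

# A hypothetical 5-second clip with the settings from the example above
shape = spectrogram_shape(5.0)

# Time covered by one hop, in milliseconds
hop_ms = 1000.0 * 512 / 22050

print(shape)             # (128, 216)
print(round(hop_ms, 1))  # 23.2
```

So each column of the spectrogram advances about 23 ms, and a smaller `hop_length` buys finer time resolution at the cost of a wider array.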

Boom! Now you know the giddy-up on audio processing and turning those sweet tunes into the grist for the classification mill. So, no more feathers plucked! Pick up those Python books and let's get the birds singin'!

This competition kicked off March 10, 2025, and had a final submission deadline on June 5, 2025. Let me tell you, $50,000 was up for grabs, with extra goodies for top-tier participants.

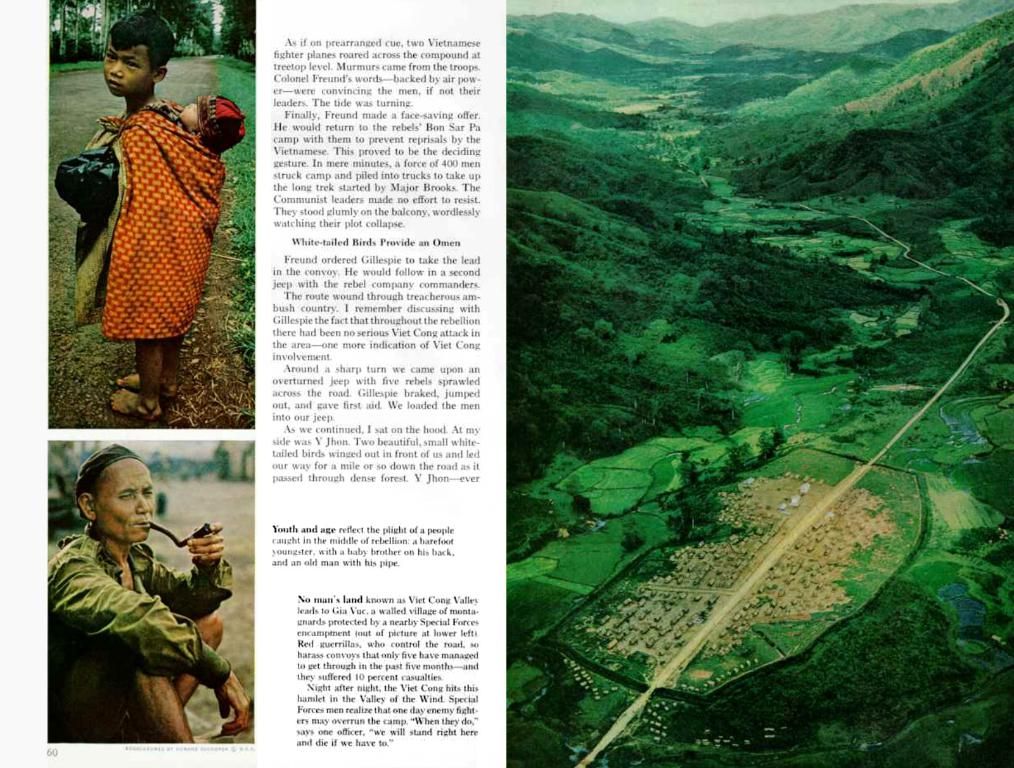

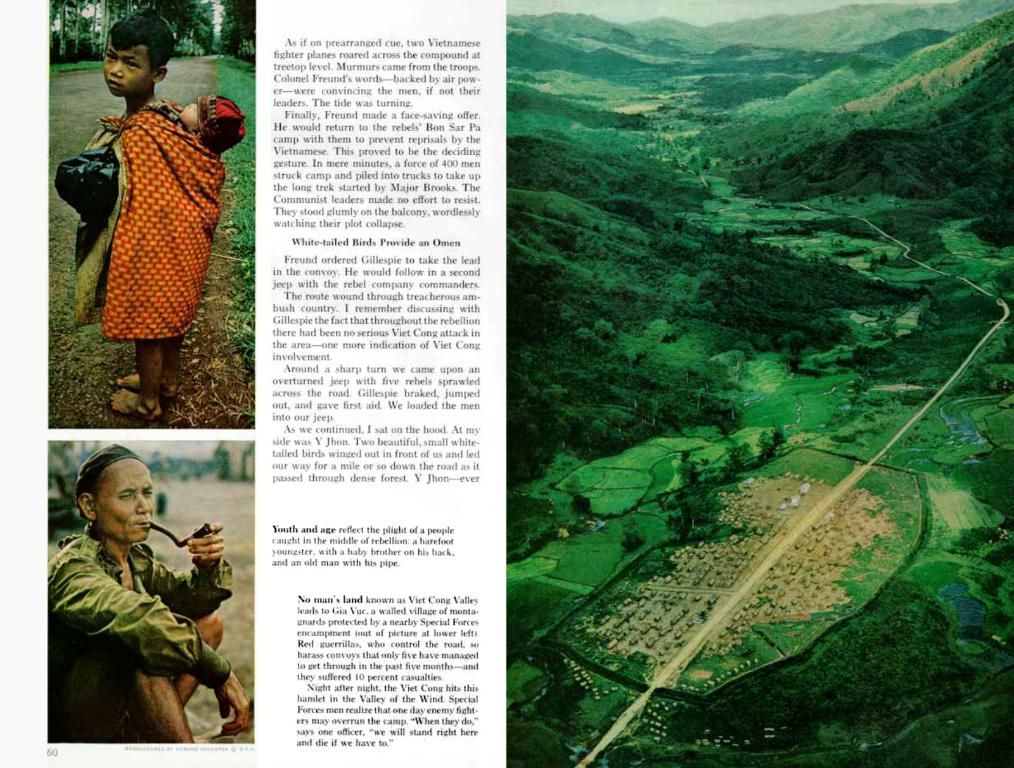

Technology plays a crucial role in the BirdCLEF+ 2025 competition, with artificial-intelligence methods being used to distinguish the songs and calls of various species from the Middle Magdalena Valley of Colombia.

The competition focuses on advancing bioacoustics research by developing reliable classifiers for species identification using machine learning, particularly for often overlooked creatures.