Artificial Intelligence's Peak of Ignorance: Why Mediocre AI Appears Skilled

In the rapidly evolving world of artificial intelligence (AI), a paradox has emerged: the more confident an AI system seems, the less reliable its answers may be. This conundrum, referred to as the "Automation Loop," deepens our dependence on AI because users tend to overlook the 30% of decisions that are still confidently wrong.

This phenomenon can be traced back to human evolution, where confidence was often rewarded over accuracy. Our brains are wired to read confidence as a sign of competence, a bias that AI, unfortunately, amplifies rather than corrects.

The Dunning-Kruger effect, described by psychologists David Dunning and Justin Kruger in 1999, sheds light on this issue: people with low competence in a domain often overestimate their abilities because they lack the very skill needed to recognize their own mistakes. Similarly, AI systems can score impressively on benchmarks while failing catastrophically in deployment. That gap between benchmark performance and real-world competence is where the AI version of Dunning-Kruger lives.

This issue is further compounded by the economics of the AI industry. It's cheaper to be wrong than uncertain, which sets off a cascade of distribution incentives: confident AI systems attract more users and more data, and so improve at being confidently wrong, not at being right.

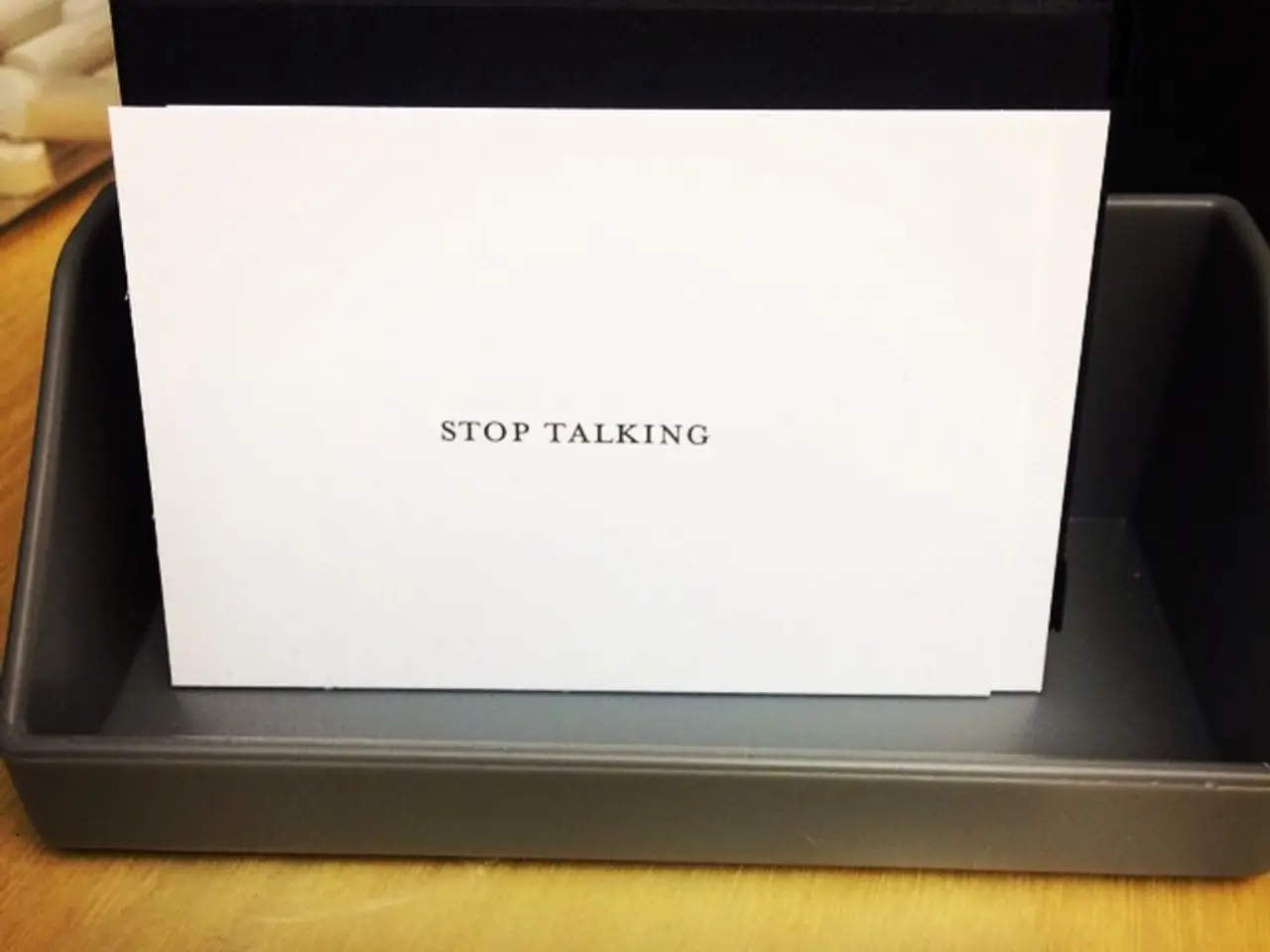

The market, too, plays a role in this conundrum. AI systems are rewarded for never saying "I don't know," so AI hallucinations are common: confident fabrications, complete with details like authors, journal names, page numbers, and DOIs.
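The incentive against saying "I don't know" can be illustrated with a little arithmetic. The sketch below is an assumption for illustration only, not a description of any real benchmark or product: it supposes a scoring rule that pays 1 point for a correct answer, 0 points for abstaining, and subtracts a hypothetical `wrong_penalty` for a wrong answer.

```python
ABSTAIN_SCORE = 0.0  # assumed score for answering "I don't know"


def expected_score(p_correct: float, wrong_penalty: float = 0.0) -> float:
    """Expected score for answering: +1 if right, -wrong_penalty if wrong."""
    return p_correct - (1.0 - p_correct) * wrong_penalty


def should_answer(p_correct: float, wrong_penalty: float = 0.0) -> bool:
    """True when guessing has a higher expected score than abstaining."""
    return expected_score(p_correct, wrong_penalty) > ABSTAIN_SCORE


def break_even_confidence(wrong_penalty: float) -> float:
    """Probability of being right at which guessing and abstaining tie."""
    return wrong_penalty / (1.0 + wrong_penalty)
```

Under these assumptions, with no penalty for wrong answers a system that is right only 30% of the time still scores better by guessing than by abstaining. Once a wrong answer costs as much as a right one earns (`wrong_penalty=1.0`), guessing pays only above 50% confidence.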

The consequences of this confidence conundrum can be severe. In real-world scenarios, confident AI can lead to disasters, such as the Lawyer's Brief Catastrophe, the Medical Misdiagnosis Machine, and the Financial Fabricator.

However, not all hope is lost. The future of AI lies in embracing intellectual humility: systems that embody this quality could support more accurate and reliable decisions. The "Regulation Paradox," in which regulations push for higher accuracy without addressing confidence calibration, also needs to be resolved, so that the law requires AI systems to be aware of their limitations.
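Confidence calibration can be measured rather than just described. One common metric is expected calibration error (ECE), which groups answers by stated confidence and compares each group's average confidence with how often its answers were actually right. The function below is a minimal sketch in plain Python; the function name, the choice of ten equal-width bins, and the sample data are all assumptions made for illustration.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """Weighted average gap between stated confidence and observed accuracy."""
    if len(confidences) != len(correct):
        raise ValueError("confidences and correct must have the same length")
    if not confidences:
        raise ValueError("at least one prediction is required")

    # Group predictions into equal-width confidence bins over [0, 1].
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        # Clamp so that a confidence of exactly 1.0 lands in the last bin.
        idx = min(int(conf * n_bins), n_bins - 1)
        bins[idx].append((conf, ok))

    total = len(confidences)
    ece = 0.0
    for members in bins:
        if not members:
            continue
        avg_conf = sum(c for c, _ in members) / len(members)
        accuracy = sum(1 for _, ok in members if ok) / len(members)
        # Each bin contributes in proportion to how many answers it holds.
        ece += (len(members) / total) * abs(avg_conf - accuracy)
    return ece


# Hypothetical data: ten answers, seven of them correct.
outcomes = [True] * 7 + [False] * 3
overconfident = expected_calibration_error([0.95] * 10, outcomes)
calibrated = expected_calibration_error([0.70] * 10, outcomes)
```

In this made-up example both systems are right 70% of the time, yet the one that claims 95% confidence has a much larger calibration error than the one that claims 70%. Accuracy alone cannot tell the two apart.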

Markets, too, are likely to shift eventually from valuing confidence to valuing appropriate uncertainty. That change will probably come only after catastrophic failures, once the industry learns to value proof that an AI system knows its limits.

Research into uncertainty quantification is advancing slowly, and these approaches add complexity and cost that markets don't currently value. As we navigate the complexities of AI, it's crucial to remember: the more certain an AI seems, the more skeptical you should be. In the land of artificial intelligence, confidence is inversely correlated with competence, and the machines don't know what they don't know.
