AI-generated voice deceptions pose a growing threat, with perpetrators masquerading as government figures.

Navigating Trust in the AI Era: Perspectives from Pindrop, Anonybit, and Validsoft

As agentic AI advances rapidly, understanding how fraud and security are changing has never been more important. Here are key insights from Pindrop, Anonybit, and Validsoft.

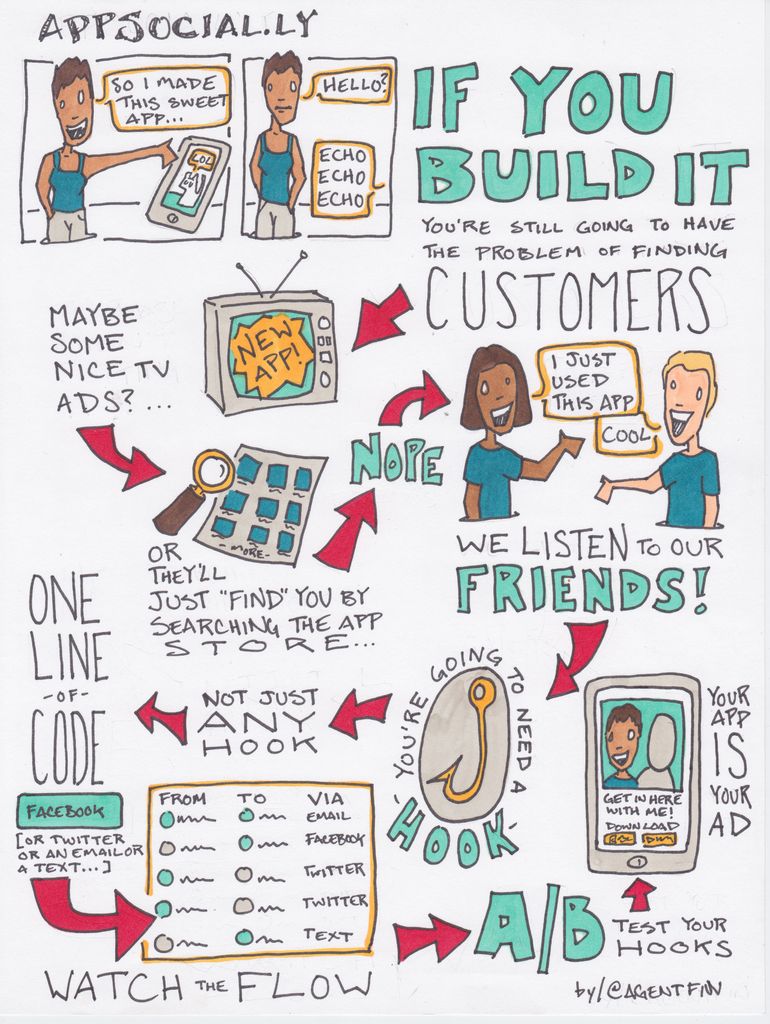

The Rising Tide of Deception

The wide availability of generative AI tools has fueled a surge in deceptive tactics such as deepfake and synthetic voice scams. Pindrop warns that one in every 599 calls is fraudulent and expects deepfake attacks to increase by 162% by 2025[1][2].

Agentic AI allows machines to mimic human speech, act independently, and launch impersonation attacks at an unprecedented scale. This lets fraudsters automate mass attacks and makes sophisticated schemes more accessible[1].

Challenges in the Trust and Security Realm

The growing use of synthetic voices and biometric injection attacks during account inquiries and credential provisioning casts doubt on traditional verification methods. This changes the risk profile for both organizations and individuals[1]. As the number of deepfake and synthetic voice incidents grows, maintaining customer trust in digital and voice interactions becomes paramount[2].

Strategic Responses and Solutions

Companies such as Pindrop are developing advanced fraud detection solutions that use audio, voice, and AI technology to identify and combat deepfake-driven threats in real time. These systems prioritize detecting synthetic voices, protecting high-value transactions, and neutralizing social engineering attacks before damage is done[2][4].

To stay ahead on security, businesses must adapt quickly to the rapidly evolving agentic AI landscape, which requires constant innovation in fraud detection and prevention[2][3]. As the threat landscape expands, collaboration between organizations and technology providers such as Pindrop, Anonybit, and Validsoft becomes critical to maintaining trust within the digital ecosystem[1][4].

| Insight Area | Key Point |
|---|---|
| Fraud Exposure | Anticipate a 162% rise in deepfake fraud by 2025[1] |
| AI Impact | Agentic AI scales impersonation attacks, empowers fraudsters[1] |
| Biometric Threat | Synthetic voice and injection attacks on the rise[1] |
| Customer Trust | Maintaining customer confidence crucial for trust[2] |
| Solution Approach | Advanced detection using AI and biometrics; real-time mitigation[2][4] |
| Innovation Need | Ongoing evolution of detection tools to match advancing AI threats[2][3] |

These insights underscore the urgent need for robust, forward-looking security measures that safeguard trust in digital communications and transactions as agentic AI spreads[1][2][4].

With AI-driven deepfake fraud predicted to surge by 162% by 2025[1], news coverage should highlight advances in AI-based fraud detection, as advocated by companies like Pindrop, so that the crime and justice issues associated with this technology are addressed effectively[2][4]. To counter the threat from synthetic voice and biometric injection attacks, businesses need to invest in solutions built on AI technology, as demonstrated by Pindrop, in order to maintain public trust and secure digital communications[1][2].